Split testing is a marketing technique used to identify and measure the influence individual campaign characteristics have on performance. The value of split testing has long been understood in web design, email marketing, and other tech disciplines, and part of what makes it appealing to mobile marketers for re-engagement is its ability to unlock incremental improvement. For mobile marketers struggling with the challenge of maintaining an active user base, split testing can serve as a powerful re-engagement tool.

How Split Testing Works

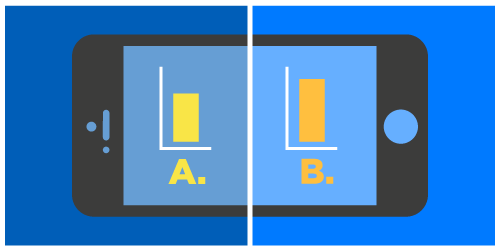

Implementing a split test (also known as an A/B test, A/B split test, or bucket test) requires setting up a control campaign (A) and making one deliberate change to create a second campaign (B). The two campaigns are sent to separate groups of users, and performance data is measured for each. The data derived from the test enables you to compare the performance of two slightly different campaigns in a side-by-side, controlled manner, and identify the factors that lead to higher conversion rates.

Split tests should focus on measuring the impact of one specific characteristic. Changing multiple variables within the same test makes it difficult to determine which factor is actually responsible for altering performance. Focusing on a single factor enables you to understand the impact of each characteristic, and steadily improve your campaigns over time.

Factors to Evaluate

In theory, every individual component of your re-engagement campaign could be experimented with in a split test. The precise factors to evaluate during split tests vary based on the type of campaign you’re running (e.g. paid social, paid search, email, or push notifications), but some of the most common elements include:

Deep Link Target

Deep linking is a highly useful re-engagement tool. Because users already have your app installed, you have the ability to connect them directly to a relevant screen deep within your app. Depending on your re-engagement goal, the best screen to link users to may not be intuitively obvious. That makes the deep link location (i.e. the screen the user is directed to after clicking on the ad) a natural factor to evaluate through split testing: vary the target screen and measure the impact on conversion.

Image

Ads that include images are known for having relatively high click-through rates (CTR). For example, one email marketing study found that campaigns with images had a 42 percent higher CTR than campaigns without images. Some platforms enable you to upload multiple images, which are automatically rotated throughout the duration of the campaign. Using these features is a good way to get a sense of which types of images are best suited for driving re-engagement.

Call-to-action (CTA)

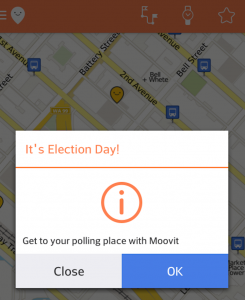

The call-to-action is the element of your ad where you actually invite the user to take a specific action. For example, in this re-engagement push notification from the transportation app Moovit, the CTA is “OK.” Marketers could split test to determine whether a CTA like “Plan Trip” or “See Route” results in more clicks.

Text

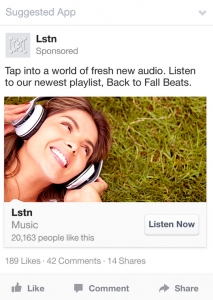

Most mobile re-engagement ad formats include a short, customizable message. For example, the two lines of text directly above the image in this Facebook re-engagement ad are determined by the marketer and could be experimented with in a split test. Split testing text enables you to identify the message that resonates most with your users, which not only leads to higher click-through rates, but also gives you insight into the preferences of your audience.

Subject Line

The subject line is an important split testing element when driving re-engagement through email marketing. You might experiment with wording, appearance, or length, or add an element of personalization (e.g. including the user’s name). Considering that up to 66 percent of emails are opened on a mobile device, email marketing can be a valuable strategy for mobile re-engagement (especially when it’s combined with deep linking).

Timing

Split testing the timing of your re-engagement campaigns enables you to identify the optimal time to reach out to your users. For example, you might consider split testing to determine whether a push notification results in higher levels of re-engagement if it’s sent mid-morning or in the afternoon, or whether a campaign performs better on Mondays vs. Wednesdays. When you’re split testing timing, it’s important that the ad design (text, image, CTA, and so on) is the same in both campaigns. In other words, campaign A and campaign B use the exact same ad; they’re just sent at different times of the day or week.

Selecting your Audience

Re-engagement ads are targeted at users who have not interacted with your app for an extended period. Which users are good candidates for re-engagement varies from vertical to vertical. For example, a gaming app might choose to target users after ten days of inactivity, whereas a personal tax app might go several months before reaching out to dormant users. Analyzing in-app data should help you determine the length of inactivity that qualifies a user for re-engagement for your specific app.
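As a rough illustration of applying an inactivity threshold, the sketch below filters a set of users by their last-active date. The function name `dormant_users`, the user IDs, and the dates are all hypothetical; a real app would pull this information from its own analytics data.

```python
from datetime import date, timedelta


def dormant_users(last_active, today, inactivity_days):
    """Return the IDs of users whose last activity is at least `inactivity_days` old."""
    cutoff = today - timedelta(days=inactivity_days)
    return sorted(uid for uid, seen in last_active.items() if seen <= cutoff)


# Hypothetical sample data: user ID -> date of the user's last in-app session.
last_active = {
    "u1": date(2024, 3, 1),
    "u2": date(2024, 3, 18),
    "u3": date(2024, 2, 10),
}
today = date(2024, 3, 20)

# A gaming app might use a ten-day threshold.
print(dormant_users(last_active, today, inactivity_days=10))  # ['u1', 'u3']
```

Changing `inactivity_days` is all it takes to model a different vertical, such as a threshold of several months for a tax app.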

After your targeted users have been identified, they need to be segmented in preparation for the split test. Some of the most common segmenting methods include:

10/10/80 Split:

• 10 percent of users receive campaign A

• 10 percent of users receive campaign B

• Data is analyzed and the winning campaign selected

• The remaining 80 percent of users receive the winning campaign

80/20 Split:

• 80 percent of users receive campaign A

• 20 percent of users receive campaign B

• Data is analyzed and the winning campaign selected

• If additional split testing is needed, the winning campaign acts as the new campaign A (sent to 80 percent of users) and is compared against a new campaign B (sent to 20 percent of users)

50/50 Split:

• 50 percent of users receive campaign A

• 50 percent of users receive campaign B

• Data is analyzed and the winning campaign selected

• If additional split testing is needed, the winning campaign acts as the new campaign A (sent to 50 percent of users) and is compared against a new campaign B (sent to 50 percent of users)

If you’re testing general design concepts (like the template of a re-engagement email), starting with a 50/50 split may be a good choice. But if you’re working with well-established paid social or paid search designs, starting with a 10/10/80 or 80/20 split may be more advantageous.
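The segmenting methods above can be sketched in a few lines of code. This is a minimal example that assumes users are assigned to groups at random; the function name `split_audience`, the group labels, and the generated user IDs are illustrative and do not come from any particular marketing platform.

```python
import random


def split_audience(user_ids, shares, seed=0):
    """Shuffle users, then cut the list into consecutive groups sized by `shares`."""
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1")
    users = list(user_ids)
    # A fixed seed keeps the assignment reproducible between runs.
    random.Random(seed).shuffle(users)
    names = list(shares)
    groups, start = {}, 0
    for i, name in enumerate(names):
        # The last group takes whatever remains, so rounding never drops a user.
        if i == len(names) - 1:
            end = len(users)
        else:
            end = start + round(len(users) * shares[name])
        groups[name] = users[start:end]
        start = end
    return groups


# Hypothetical audience of 1,000 dormant users, segmented 10/10/80.
users = [f"user{i}" for i in range(1000)]
groups = split_audience(users, {"A": 0.10, "B": 0.10, "remaining": 0.80})
print({name: len(members) for name, members in groups.items()})
# {'A': 100, 'B': 100, 'remaining': 800}
```

Passing `{"A": 0.80, "B": 0.20}` or `{"A": 0.50, "B": 0.50}` produces the other two splits. Shuffling before cutting is one simple way to keep the groups comparable, so that differences in performance are more likely to come from the campaign than from who received it.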

Declaring a Winner

The click-through rate (CTR) is the primary metric used to determine which campaign is more successful in a split test. The CTR is calculated by taking the number of ad clicks, and dividing it by the number of impressions (the number of times an ad is displayed). For example:

• If campaign A was displayed to 5,000 dormant users and resulted in 200 clicks, the CTR would be 200/5,000 = 0.04 or 4%.

• If campaign B was also displayed to 5,000 dormant users and resulted in 450 clicks, the CTR would be 450/5,000 = 0.09, or 9%.

In this example, campaign B, with a 9% CTR, would be considered the winner.
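The same arithmetic can be expressed as a short script. The helper name `click_through_rate` is an illustrative choice; the click and impression counts are the ones from the example above.

```python
def click_through_rate(clicks, impressions):
    """CTR = number of ad clicks divided by number of impressions."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return clicks / impressions


# (clicks, impressions) for each campaign, taken from the example above.
campaigns = {"A": (200, 5000), "B": (450, 5000)}

rates = {name: click_through_rate(c, i) for name, (c, i) in campaigns.items()}
for name, rate in rates.items():
    print(f"Campaign {name}: {rate:.1%}")  # Campaign A: 4.0%, Campaign B: 9.0%

winner = max(rates, key=rates.get)
print("Higher CTR:", winner)  # Higher CTR: B
```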

Understanding the Data

Before you make campaign changes based on the results of your split tests, it’s important to ensure your data is statistically significant. In other words, from a mathematical perspective, did the changes you made actually contribute to more clicks, or were the additional clicks a product of random luck or some other factor?

The easiest way to determine statistical significance is by using a split testing significance calculator. There are several free calculators on the web that simply require you to enter two values for each campaign: the number of users who viewed your ad and the number who clicked it. The calculator then indicates whether the difference between your campaigns is statistically significant.

Using the scenario above as an example, suppose campaign A resulted in 425 clicks per 5,000 impressions (8.5% CTR) and campaign B resulted in 450 clicks per 5,000 impressions (9% CTR). Even though campaign B’s CTR is higher, the difference in performance is so small that you would not be able to draw any mathematically valid conclusions from the test.
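For readers curious about what such a calculator might be doing, here is a minimal sketch of one common approach, a two-proportion z-test. This is an assumption about the method, since online calculators may use other tests, and the 0.05 cutoff is a widely used convention rather than something specified in this article. The function name `two_proportion_z_test` is illustrative.

```python
import math


def two_proportion_z_test(clicks_a, views_a, clicks_b, views_b):
    """Return (z, two-sided p-value) for the difference between two CTRs."""
    p_a = clicks_a / views_a
    p_b = clicks_b / views_b
    # Pooled CTR under the assumption that both campaigns perform the same.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


ALPHA = 0.05  # conventional significance threshold (an assumption, not from the article)

# 8.5% vs. 9% CTR: the close result described above.
z_close, p_close = two_proportion_z_test(425, 5000, 450, 5000)
print(f"z = {z_close:.2f}, significant: {p_close < ALPHA}")

# 4% vs. 9% CTR: the earlier example with a clear winner.
z_clear, p_clear = two_proportion_z_test(200, 5000, 450, 5000)
print(f"z = {z_clear:.2f}, significant: {p_clear < ALPHA}")
```

Under these assumptions, the 8.5% vs. 9% comparison gives a z-score of about 0.88 and a p-value well above 0.05, so it is not significant, while the 4% vs. 9% comparison is.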

Keeping Conversions in Mind

Effective re-engagement campaigns are designed with clear conversion goals that go beyond just getting users back in your app. As you use split testing to refine your campaigns, don’t get so locked into the CTR that you lose sight of your ultimate goal – conversions. Through split testing you might be able to design ads with incredible click-through rates, but if those ads aren’t leading to conversions, you may need to step back and identify factors outside of the campaign that may be contributing to the low performance.

In Summary

Split testing is a marketing technique that can help you optimize your mobile re-engagement campaigns. Through incremental changes to individual factors like the deep link target, image, call-to-action, text, subject line, or timing, you can identify the optimal way to connect with your existing users and increase engagement. Focusing on a single characteristic will enable you to more accurately attribute changes in campaign performance, and better understand user preferences.

There are multiple methods for targeting and segmenting your dormant users. Your app vertical and the purpose of your campaign will give you some guidance on what strategy is most appropriate. The winning campaign is determined by the click-through rate, but it’s important to ensure your data is statistically significant before making any campaign adjustments. Ultimately, split testing is all about increasing conversions. Through incremental improvements you can refine your mobile marketing campaigns and activate your dormant users.

This article is part of the Mobile Marketing Essentials series.

Author

Becky is the Senior Content Marketing Manager at TUNE. Before TUNE, she handled content strategy and marketing communications at several tech startups in the Bay Area. Becky received her bachelor's degree in English from Wake Forest University. After a decade in San Francisco and Seattle, she has returned home to Charleston, SC, where you can find her strolling through Hampton Park with her pup and enjoying the simple things in life.
